Web Scraping in Python: Automating Real Data Collection with Ease

Web scraping is the automated extraction of information from websites, used to collect data for analysis, price monitoring, news aggregation, and similar tasks. The programs that do this work are called web scrapers. Most programming languages can be used for scraping, but Python remains one of the most popular choices thanks to its readable syntax, extensive libraries, and active development.

In this tutorial, we will discuss the basic tools of web scraping with Python and walk through an example implementation. By following our instructions, you'll be able to create a simple scraper, avoid common pitfalls, and optimize your workflow.

1. Requests

A simple yet powerful library for sending HTTP requests and fetching HTML data.

- ✅ Ideal for small-scale scraping tasks.

- ✅ Great for basic static sites.

- ❌ Not suitable for handling JavaScript-generated content.

2. Aiohttp

An asynchronous HTTP client for handling multiple requests simultaneously.

- ✅ Well suited to large-scale scraping that needs high throughput.

- ✅ Handles concurrent requests efficiently.
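
The concurrency pattern behind an asynchronous client can be sketched with the standard library alone. In the example below, `fake_fetch` is a hypothetical stand-in for a real HTTP call (with Aiohttp you would await a request made through a `ClientSession` instead), and the URLs and the limit of three simultaneous requests are illustrative assumptions.

```python
import asyncio


async def fake_fetch(url: str) -> str:
    """Hypothetical stand-in for a network call: sleeps instead of using HTTP."""
    await asyncio.sleep(0.01)
    return f"<html>content of {url}</html>"


async def fetch_all(urls, max_concurrency=3):
    """Fetch every URL concurrently, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url):
        # The semaphore keeps the scraper from flooding the target server
        async with semaphore:
            return await fake_fetch(url)

    # gather() returns results in the same order as the input URLs
    return await asyncio.gather(*(bounded_fetch(u) for u in urls))


urls = [f"https://example.com/page/{n}" for n in range(1, 6)]
pages = asyncio.run(fetch_all(urls))
print(len(pages))
```

Capping concurrency with a semaphore is one common way to stay polite toward the target site while still overlapping the waiting time of many requests.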

3. Lxml

A powerful library for parsing HTML and XML documents.

- ✅ Supports XPath and XSLT for advanced parsing.

- ✅ High-speed processing.
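
As a rough illustration of XPath-based parsing, the sketch below runs a few queries against a small, made-up HTML snippet. The markup, class names, and prices are assumptions invented for this example and do not come from a real site.

```python
from lxml import html

# Illustrative HTML snippet (made up for this example)
SAMPLE = """
<html><body>
  <ul id="books">
    <li class="book"><a href="/b/1">First Book</a><span class="price">10.50</span></li>
    <li class="book"><a href="/b/2">Second Book</a><span class="price">7.25</span></li>
  </ul>
</body></html>
"""

tree = html.fromstring(SAMPLE)

# text() selects the text nodes, @href selects the attribute values
titles = tree.xpath('//li[@class="book"]/a/text()')
links = tree.xpath('//li[@class="book"]/a/@href')
prices = [float(p) for p in tree.xpath('//span[@class="price"]/text()')]

print(titles)
print(links)
print(prices)
```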

4. BeautifulSoup

A user-friendly parsing library that extracts data from an HTML document.

- ✅ Works well with messy or poorly structured HTML content.

- ✅ Multiple parser options (built-in, lxml, html5lib).

5. Scrapy

A robust web scraping framework with built-in support for data processing.

- ✅ Handles asynchronous requests.

- ✅ Suitable for large-scale projects.

6. Selenium

A browser automation tool that mimics user interactions.

- ✅ Useful for scraping dynamic websites with JavaScript content.

- ❌ Slower than Requests and BeautifulSoup.

7. Pyppeteer

A Python port of Puppeteer for controlling a headless browser.

- ✅ Automates web browsing tasks.

- ✅ Ideal for scraping complex websites.

8. Playwright

A next-gen automation tool supporting multiple browsers and programming languages.

- ✅ Supports Chromium, Firefox, and WebKit.

- ✅ Can run multiple isolated browser contexts in parallel for efficiency.

Before writing a scraper, it’s crucial to understand the HTML document structure. Websites are composed of elements such as:

- <html>: Root element.

- <head>: Metadata and page title.

- <body>: Visible content, including:

  - <h1>, <h2>, … <h6>: Headings.

  - <p>: Paragraphs.

  - <a>: Hyperlinks.

  - <img>: Images.

  - <div>, <span>: Containers for styling and layout.

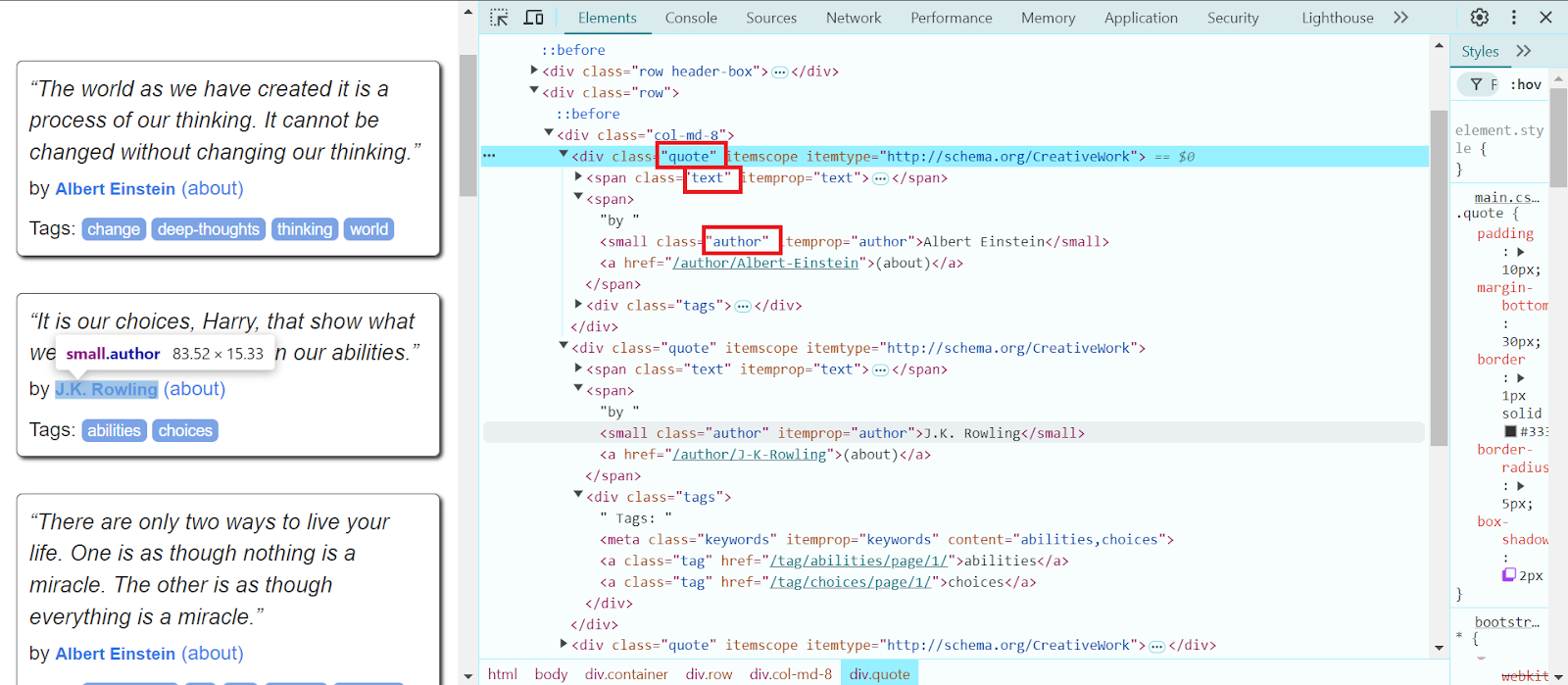
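
To see how these elements nest, the following sketch prints an indented outline of the tags in a small page using only the standard library's `html.parser`. The sample page and the `OutlineParser` class are hypothetical and exist only for this illustration. The set of void tags is a partial list chosen for the example.

```python
from html.parser import HTMLParser

# Tags that never have a closing tag (partial list, enough for this example)
VOID_TAGS = {"img", "br", "hr", "meta", "link", "input"}

SAMPLE = """
<html>
<head><title>Demo</title></head>
<body>
<h1>Heading</h1>
<p>Text with a <a href="/next">link</a>.</p>
<img src="pic.png">
<div><span>Box</span></div>
</body>
</html>
"""


class OutlineParser(HTMLParser):
    """Records each opening tag, indented by its nesting depth."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.outline = []

    def handle_starttag(self, tag, attrs):
        self.outline.append("  " * self.depth + f"<{tag}>")
        if tag not in VOID_TAGS:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS:
            self.depth -= 1


parser = OutlineParser()
parser.feed(SAMPLE)
parser.close()
print("\n".join(parser.outline))
```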

Finding Elements

Use browser Developer Tools (F12 → Elements) to inspect page structure. Look for:

- IDs (id='unique-id') – Use find(id='unique-id').

- Classes (class='example') – Use find_all(class_='example').

- CSS selectors (.classname) – Use select('.classname').
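
The three lookups listed above can be tried on a small inline document before pointing them at a real page. The HTML snippet and its IDs and class names below are made up for illustration.

```python
from bs4 import BeautifulSoup

# Illustrative markup (not taken from a real site)
SAMPLE = """
<div id="main">
  <p class="intro">Welcome</p>
  <p class="item">First</p>
  <p class="item">Second</p>
  <a class="item link" href="/more">More</a>
</div>
"""

soup = BeautifulSoup(SAMPLE, "html.parser")

main = soup.find(id="main")            # single element, looked up by ID
items = soup.find_all(class_="item")   # every element carrying the class
links = soup.select("#main a.link")    # CSS selector, returns a list

print(main.name)
print([el.get_text(strip=True) for el in items])
print([a["href"] for a in links])
```

Note that `find_all(class_='item')` also matches the link, because an element with several classes matches if any one of them equals the requested name.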

We’ll extract quotes from https://quotes.toscrape.com/ using Requests and BeautifulSoup.

1. Install Required Libraries

pip install beautifulsoup4 requests

2. Implement the Scraper

import requests
from bs4 import BeautifulSoup

url = 'https://quotes.toscrape.com/'
# Some sites reject requests that lack a browser-like User-Agent
headers = {'User-Agent': 'Mozilla/5.0'}

# A timeout keeps the script from hanging on an unresponsive server
response = requests.get(url, headers=headers, timeout=10)

if response.status_code == 200:
    soup = BeautifulSoup(response.text, 'html.parser')
    # Each quote on the page sits in an element with the class "quote"
    quotes = soup.select('.quote')

    # Print the first three quotes with their authors
    for quote in quotes[:3]:
        text = quote.select_one('.text').get_text(strip=True)
        author = quote.select_one('.author').get_text(strip=True)
        print(f'Quote: {text}\nAuthor: {author}\n')
else:
    print(f'Failed to retrieve page. Status code: {response.status_code}')

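This script fetches the first page of the site, selects every element with the quote class, and prints the text and author of the first three quotes. It is a reasonable starting point for beginners learning Python-based web scraping.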
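
One possible refinement, sketched here under assumptions, is to move the parsing step into its own function so it can be tested without a network connection. The `parse_quotes` helper and the hand-written sample markup are hypothetical. The sample only imitates the `.quote`, `.text`, and `.author` class names used in the scraper above.

```python
from bs4 import BeautifulSoup


def parse_quotes(html, limit=None):
    """Return a list of {'text', 'author'} dicts found in the given HTML.

    limit caps how many quote blocks are examined (None means all of them).
    """
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for quote in soup.select(".quote")[:limit]:
        text = quote.select_one(".text")
        author = quote.select_one(".author")
        # Skip blocks missing either field instead of raising AttributeError
        if text is None or author is None:
            continue
        results.append({
            "text": text.get_text(strip=True),
            "author": author.get_text(strip=True),
        })
    return results


# Hand-written sample that imitates the class names used by the scraper
SAMPLE = """
<div class="quote"><span class="text">"Stay curious."</span><small class="author">A. Writer</small></div>
<div class="quote"><span class="text">"No author here."</span></div>
<div class="quote"><span class="text">"Keep going."</span><small class="author">B. Author</small></div>
"""

quotes = parse_quotes(SAMPLE)
print(quotes)
```

Checking for `None` before calling `get_text` guards against one common pitfall: `select_one` returns `None` when the selector matches nothing, so chaining a method call directly onto it fails on pages with incomplete markup.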

Newcomers to data scraping can start with Python libraries such as BeautifulSoup and Requests to collect the data they need. As projects grow more complex, Scrapy and Selenium offer more sophisticated capabilities. Always respect a website's robots.txt file and check the applicable legal requirements before scraping its pages.
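
A robots.txt check can be automated with the standard library's `urllib.robotparser`. The sketch below parses an illustrative rule set from a string so that it runs offline. The rules, the `my-scraper` user agent, and the example.com URLs are assumptions made for this example.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content (not taken from any real site)
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# can_fetch() answers whether the given user agent may request the URL
allowed = parser.can_fetch("my-scraper", "https://example.com/quotes/")
blocked = parser.can_fetch("my-scraper", "https://example.com/private/data")
# crawl_delay() returns the requested pause between requests, if any
delay = parser.crawl_delay("my-scraper")

print(allowed, blocked, delay)
```

Against a live site, you would typically call `set_url()` with the address of the site's robots.txt and then `read()` instead of parsing a string.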
